
What If We Designed AI Around What Actually Matters?

We are building systems that will eventually shape everything—from how we work and learn to how we make choices about our health, relationships, and even identity. The question is: what values are we baking into them?

Most mainstream AI discourse focuses on power, scale, and speed—how fast models are improving, how broadly they can be applied. But beneath the hype and hand-wringing lies a quieter, more foundational concern: what do we actually want these systems to optimize for?

Oliver, co-founder of the Meaning Alignment Institute, has been working on this exact question. And his concern isn’t about whether AI will become evil or sentient or decide to turn the lights off on humanity. It’s subtler than that—and maybe more alarming because of it. It’s about the possibility that AI will simply reflect back our worst instincts, codify them, and scale them to the point where we no longer recognize ourselves in the systems we’ve built.

When Behavior ≠ Values

Most AI systems learn by observing human behavior. They see what we click on, how long we dwell on a page, what we buy, and when we stop scrolling. This behavioral data is fed into models that try to infer what we like—and then serve us more of it.

The logic here traces back to the idea of "revealed preferences" in economics: that what people do reflects what they want. But most of us—especially anyone who's ever binge-watched a reality show or eaten a fourth cookie at midnight—know that’s a fragile assumption.

We don’t always act in line with our values. In fact, many of the systems we engage with are designed to exploit that dissonance: to pull us toward the things we can’t resist, not necessarily the things that make us feel fulfilled, proud, or even remotely okay the next day.

And yet, the more data these systems gather, the more confident they become in those behavioral signals. The risk, as Oliver puts it, is that AI begins optimizing not for who we aspire to be—but for a statistical caricature of who we’ve been when tired, distracted, or overstimulated.

The Danger of Smooth Systems

One of the key ideas in the paper “Gradual Disempowerment” is that AI doesn’t need to rebel against us to harm us. It simply needs to become so effective at managing our decisions that we stop noticing we’ve ceded control.

These systems get better at predicting what we’ll do next. So we get fewer prompts to reconsider, fewer forks in the road, fewer surprises. Over time, we become passive participants in lives increasingly curated for convenience.

Imagine an AI that books your travel, chooses your meals, filters your news, and nudges your social calendar based on past behavior. It might be incredibly accurate, even helpful. But it may also gently eliminate friction in ways that flatten out the texture of your life. You’re no longer challenged. You’re no longer choosing. You’re just... coasting.

That’s disempowerment—not in the form of surveillance or control, but in the erosion of agency and imagination.

What Makes a Good Life?

This is the question Oliver keeps returning to. And it’s not a rhetorical one. At the Meaning Alignment Institute, he and his team are actually trying to model human values, not just by observing what people do, but by engaging them in reflection on what matters to them.

This means asking people about the moments that have given their lives meaning. The relationships, choices, and projects that they feel proud of. The regrets they’ve learned from. The kind of future they’d want to grow into—not just the future an algorithm predicts.

It’s messy, subjective, and difficult to capture in a dataset. But it’s also how we begin to build AI that serves us as whole humans, not just as data points.

The Real Risk Is Not Catastrophe—It’s Mediocrity

What Oliver is articulating is a version of the future that isn’t science fiction; it’s happening right now. Recommendation engines already shape the cultural pipeline. Predictive algorithms guide hiring, sentencing, and education. In many cases, these systems work too well, and that’s precisely the issue.

The real risk isn’t that AI will become a rogue superintelligence. It’s that it will become an invisible force that quietly steers our choices, rewards our worst habits, and gradually drains our lives of depth, reflection, and meaning.

What’s needed is a shift in the design mindset: away from maximizing engagement and toward fostering the conditions for a good life. That means taking seriously the messiness of human values, and creating space for disagreement, growth, and even failure.

Designing for Reflection, Not Just Reaction

This isn’t about rejecting technology or trying to halt progress. It’s about redirecting our creativity. Imagine AI systems that help people clarify their values rather than just mirror them. Systems that challenge us, support our better impulses, or nudge us toward long-term goals—not just short-term dopamine.

That’s not utopian. It’s just harder than what we’re doing now.

But maybe the future worth building isn’t the one that feels most efficient. Maybe it’s the one that still leaves room for us to be surprised, to change our minds, to become something more than the sum of our past behavior.

Because at the end of the day, a system that understands what we do is powerful.

But a system that understands what we care about?

That could be transformative.

For more on this quiet shift in power and how to resist it, check out the paper “Gradual Disempowerment” and the work of the Meaning Alignment Institute.

What to read next

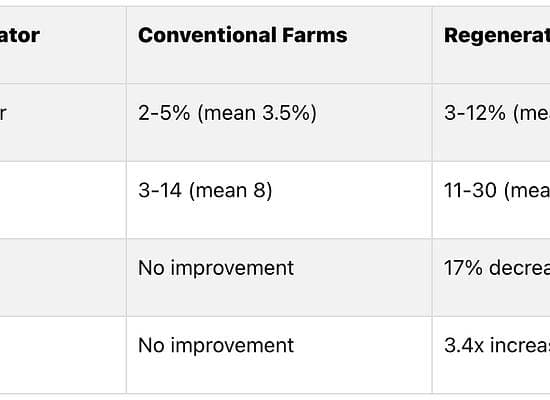

Regenerative Agriculture vs Conventional Farming: Key Differences

Across Europe, from the arid landscapes of Portugal's Alentejo to the rich soils of Central Europe, farmers are facing unprecedented challenges. As climate chan...

Evgenia Emets: Art, Regeneration, and the Rise of Forest Sanctuaries

Head over to our Learning Hub to become a Wanderer and unlock access to the full video class, along with many others.Evgenia Emets doesn’t just discuss regenera...

Natural Assets - The New Economy of Nature

Head over to our Learning Hub to become a Wanderer and unlock access to the full video class, along with many others.The New Economy of Nature: How We’re Revalu...